CI-Lab is a research group affiliated with the Institute of Advanced Computing Technology (ACT), School of Computer Science and Engineering (SCSE), Beihang University (BUAA).

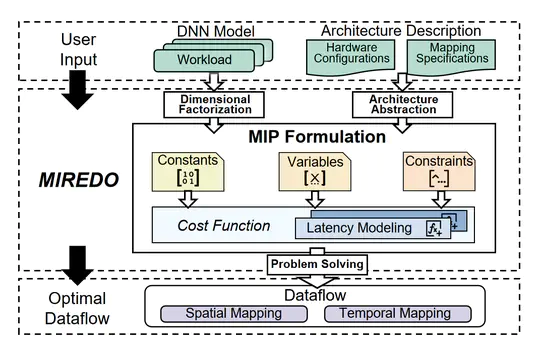

👏 Paper title: MIREDO: MIP-Driven Resource-Efficient Dataflow Optimization for Computing-in-Memory Accelerator. Computing-in-Memory (CIM) architectures have emerged as a promising solution for accelerating Deep Neural Networks (DNNs) by mitigating data movement bottlenecks. However, realizing the potential of CIM requires specialized dataflow optimizations, which are challenged by an expansive design space and strict architectural constraints. To address these limitations, we propose the MIREDO framework, which formulates dataflow optimization as a Mixed-Integer Programming (MIP) problem. MIREDO introduces a hierarchical hardware abstraction coupled with an analytical latency model designed to accurately reflect the complex data transfer behaviors within CIM systems. By jointly modeling workload characteristics, dataflow strategies, and CIM-specific constraints, MIREDO systematically navigates the vast design space to determine the optimal dataflow configurations. Evaluation results demonstrate that MIREDO significantly enhances performance, achieving up to 3.2× improvement across various DNN models and hardware setups. [related project]
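
To give a feel for the kind of integer decision such a formulation covers, here is a toy sketch that is not MIREDO's actual model. It picks tile sizes for a K×N weight matrix on a hypothetical CIM array by exhaustive enumeration rather than a MIP solver. The function `best_tiling`, the array dimensions, and the latency formula (a fixed update overhead plus one cycle per written row plus one cycle per input vector) are all assumptions made for illustration.

```python
from typing import List, Optional, Tuple


def divisors(n: int) -> List[int]:
    """Return all positive divisors of n in ascending order."""
    return [d for d in range(1, n + 1) if n % d == 0]


def best_tiling(K: int, N: int, M: int, rows: int, cols: int,
                overhead: int) -> Optional[Tuple[int, int, int]]:
    """Enumerate integer tile sizes (tk, tn) and return the lowest-latency one.

    Hypothetical constraints: tk must divide K and fit in the array rows,
    tn must divide N and fit in the array columns.
    Hypothetical cost: each tile costs `overhead + tk` cycles to write
    plus M cycles to process M input vectors.
    """
    best = None
    for tk in divisors(K):
        if tk > rows:
            continue
        for tn in divisors(N):
            if tn > cols:
                continue
            tiles = (K // tk) * (N // tn)
            latency = tiles * (overhead + tk + M)
            if best is None or latency < best[2]:
                best = (tk, tn, latency)
    return best


# Toy workload: 96x64 weights, 16 input vectors, 64x32 array.
result = best_tiling(K=96, N=64, M=16, rows=64, cols=32, overhead=10)
print(result)
```

A real MIP formulation would express the same divisibility and capacity constraints as linear constraints over integer variables and hand them to a solver, which scales to design spaces far too large to enumerate.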

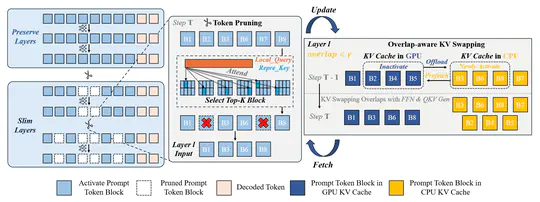

👏 Paper title: SlimInfer: Accelerating Long-Context LLM Inference via Dynamic Token Pruning. In this paper, we identify an information diffusion phenomenon in LLMs, where information from critical tokens spreads across the sequence, allowing aggressive pruning in later layers. Based on this insight, we propose SlimInfer, an inference framework that performs dynamic block-wise pruning on hidden states and introduces a predictor-free, asynchronous KV cache manager. This approach efficiently overlaps I/O with computation, achieving up to 2.53× TTFT speedup and 1.88× end-to-end latency reduction on LLaMA-3.1-8B-Instruct without sacrificing accuracy on long-context benchmarks. [related project]

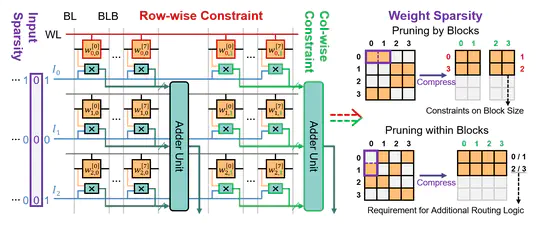

👏 Paper title: CIMinus: Empowering Sparse DNN Workloads Modeling and Exploration on SRAM-based CIM Architectures. In this paper, we propose CIMinus, a cost modeling framework that enables efficient design space exploration for sparse DNN workloads on SRAM-based compute-in-memory (CIM) architectures. We introduce FlexBlock, an expressive sparsity abstraction that captures diverse structured sparsity patterns under CIM hardware constraints. CIMinus provides an integrated workflow from model pruning to system-level evaluation, accurately estimating speedups and energy savings within a 5.27% error margin compared to recent CIM designs. [related project]

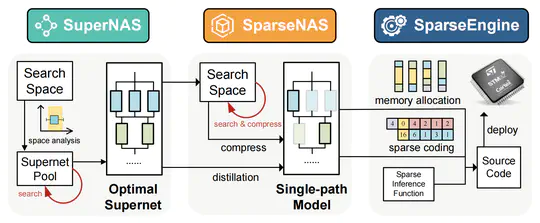

👏 Paper title: TinyFormer: Efficient Sparse Transformer Design and Deployment on Tiny Devices. In this paper, we propose TinyFormer, a framework specifically designed to develop and deploy resource-efficient transformer models on microcontrollers (MCUs). TinyFormer integrates architecture search (SuperNAS), sparse model optimization (SparseNAS), and an automated deployment tool (SparseEngine). Experimental results on CIFAR-10 demonstrate that TinyFormer can design efficient transformers achieving 96.1% accuracy while adhering to strict hardware constraints (1 MB storage and 320 KB memory), delivering up to 12.2× inference speedup compared to the CMSIS-NN library. [related project]

👏 Paper title: Towards Affordable, Adaptive and Automatic GNN Training on CPU-GPU Heterogeneous Platforms. In this paper, we introduce A3GNN, a framework for Affordable, Adaptive, and Automatic GNN training on heterogeneous CPU-GPU platforms. It improves resource usage through locality-aware sampling and fine-grained parallelism scheduling. Moreover, it leverages reinforcement learning to explore the design space and achieve Pareto-optimal trade-offs among throughput, memory footprint, and accuracy. [related project]
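
As a small illustration of what Pareto-optimal means for these three objectives, the sketch below filters a set of candidate training configurations down to the non-dominated ones. It is not A3GNN's search procedure (which the summary describes as reinforcement-learning based). The `Config` type and the sample numbers are hypothetical.

```python
from typing import List, NamedTuple


class Config(NamedTuple):
    name: str
    throughput: float  # higher is better
    memory_gb: float   # lower is better
    accuracy: float    # higher is better


def dominates(a: Config, b: Config) -> bool:
    """True if a is no worse than b on every objective and better on one."""
    no_worse = (a.throughput >= b.throughput
                and a.memory_gb <= b.memory_gb
                and a.accuracy >= b.accuracy)
    strictly_better = (a.throughput > b.throughput
                       or a.memory_gb < b.memory_gb
                       or a.accuracy > b.accuracy)
    return no_worse and strictly_better


def pareto_front(configs: List[Config]) -> List[Config]:
    """Keep only configurations that no other configuration dominates."""
    return [c for c in configs
            if not any(dominates(other, c) for other in configs)]


# Made-up candidates for illustration only.
candidates = [
    Config("A", 100.0, 8.0, 0.90),
    Config("B", 120.0, 10.0, 0.89),
    Config("C", 90.0, 9.0, 0.88),   # dominated by A on all three objectives
    Config("D", 100.0, 6.0, 0.85),
]
front = pareto_front(candidates)
print([c.name for c in front])
```

Every configuration left in `front` represents a different trade-off: improving one objective from there requires giving up some of another.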

👏 Paper title: ACE-GNN: Adaptive GNN Co-Inference with System-Aware Scheduling in Dynamic Edge Environments. In this work, we present ACE-GNN, the first adaptive GNN co-inference framework for dynamic edge environments. By integrating system-level abstraction with novel prediction methods, ACE-GNN enables rapid runtime scheme optimization and adaptive scheduling between pipeline and data parallelism. Coupled with efficient batching and specialized communication middleware, ACE-GNN achieves up to 12.7× speedup and 82.3% energy savings, significantly outperforming existing co-inference solutions. [related project]

👏 Paper title: CIMFlow: An Integrated Framework for Systematic Design and Evaluation of Digital CIM Architectures. In this paper, we introduce CIMFlow, an integrated framework that systematically bridges compilation and simulation with a flexible ISA tailored for digital Compute-in-Memory (CIM) architectures. CIMFlow addresses critical design challenges such as limited SRAM capacity through advanced partitioning and parallelism strategies, providing comprehensive and flexible exploration capabilities for digital CIM architecture design. Compared to existing tools, CIMFlow significantly improves exploration flexibility and performance, achieving up to 2.8× speedup and 61.7% energy reduction across various deep learning workloads. [related project]