Optimizing Memory Efficiency of Graph Neural Networks on Edge Computing Platforms

Image credit: Unsplash

Abstract

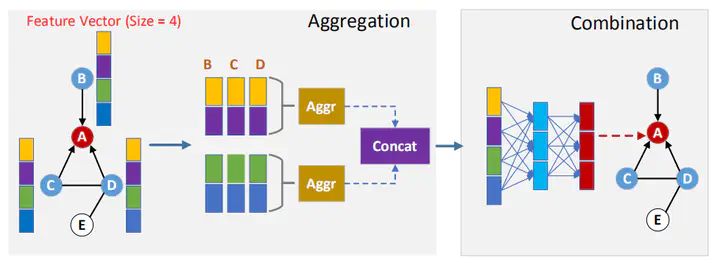

Graph neural networks (GNNs) have achieved state-of-the-art performance on various industrial tasks. However, the poor efficiency of GNN inference and frequent Out-Of-Memory (OOM) problems limit the successful application of GNNs on edge computing platforms. To tackle these problems, a feature decomposition approach is proposed to optimize the memory efficiency of GNN inference. The proposed approach delivers substantial gains on various GNN models across a wide range of datasets, speeding up inference by up to 3×. Furthermore, feature decomposition significantly reduces peak memory usage (up to a 5× improvement in memory efficiency) and mitigates OOM problems during GNN inference.
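The abstract does not spell out the mechanics of feature decomposition. One plausible reading, stated here purely as an assumption and not as the paper's published algorithm, is that the feature dimension is split into column slices and neighbor aggregation runs one slice at a time, so the edge-level message buffer shrinks from E × F to E × (F / k). The minimal pure-Python sketch below illustrates that idea for sum aggregation; the functions `aggregate_full` and `aggregate_decomposed` and the `chunk` parameter are hypothetical, and "peak" counts only the floats held in the message buffer, not total process memory.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Edge = Tuple[int, int]  # (source node, destination node)


def aggregate_full(x: Matrix, edges: List[Edge]) -> Tuple[Matrix, int]:
    """Baseline sum aggregation: materialize every edge message at full width."""
    n, f = len(x), len(x[0])
    # Edge-level message buffer of shape E x F (the assumed memory hot spot).
    messages = [list(x[src]) for src, _ in edges]
    peak = len(messages) * f
    out = [[0.0] * f for _ in range(n)]
    for (_, dst), msg in zip(edges, messages):
        row = out[dst]
        for j in range(f):
            row[j] += msg[j]
    return out, peak


def aggregate_decomposed(x: Matrix, edges: List[Edge], chunk: int) -> Tuple[Matrix, int]:
    """Hypothetical decomposed variant: same result, one feature slice at a time."""
    n, f = len(x), len(x[0])
    out = [[0.0] * f for _ in range(n)]
    peak = 0
    for start in range(0, f, chunk):
        stop = min(start + chunk, f)
        # Message buffer is only E x (stop - start) for this slice.
        messages = [x[src][start:stop] for src, _ in edges]
        peak = max(peak, len(messages) * (stop - start))
        for (_, dst), msg in zip(edges, messages):
            row = out[dst]
            for j, v in enumerate(msg):
                row[start + j] += v
        del messages  # drop the slice buffer before building the next one
    return out, peak


if __name__ == "__main__":
    # Toy graph: 4 nodes with 8 features each, 5 directed edges.
    x = [[float(i * 8 + j) for j in range(8)] for i in range(4)]
    edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
    full, full_peak = aggregate_full(x, edges)
    dec, dec_peak = aggregate_decomposed(x, edges, chunk=2)
    assert full == dec
    print(full_peak, dec_peak)  # 40 10
```

Because sum aggregation treats each feature column independently, processing slices sequentially gives the same output while the buffer peak drops by roughly the number of slices. The trade-off is more passes over the edge list, so a real system would presumably tune the slice size; how the paper balances this is not described in the abstract.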

Type

Publication

In RTAS 2021
